Step-Back Prompting - Let's make LLMs think about questions

Unveiling a New Horizon in NLP: Enhancing Reasoning through Abstraction and Human-Inspired Techniques

Generated by MidJourney (https://midjourney.com/)

In the ever-evolving landscape of Natural Language Processing (NLP), new methodologies and techniques play a crucial role in pushing the boundaries of what is possible. Today, I am excited to delve into a paper that explores a novel technique named Step-Back Prompting, designed to enhance the multi-step reasoning capabilities of Transformer-based Large Language Models (LLMs). The paper caught my attention for its human-centric approach to problem-solving.

Tackling the Challenge of Complex Reasoning with Step-Back Prompting

Complex multi-step reasoning remains a formidable challenge in NLP, despite the significant advancements we have witnessed. Existing techniques such as process supervision and Chain-of-Thought prompting have made strides in addressing it, but they do not fully overcome the problem: a single error in an intermediate step can still derail the final answer.

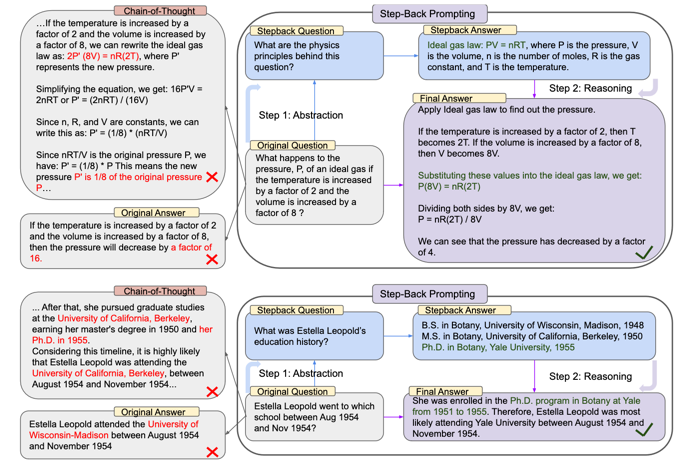

Step-Back Prompting is proposed as an answer to this problem. It introduces a two-step process. In the abstraction step, the model is prompted to step back from the specific question and derive a high-level concept or principle behind it. In the reasoning step, the model answers the original question grounded on that concept or principle. The method is designed to mitigate errors in intermediate reasoning steps and enhance the overall performance of LLMs, and it stands out for its ability to ground complex reasoning tasks in high-level concepts and principles.
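To make the abstraction-then-reasoning flow concrete, here is a minimal Python sketch. It is not the paper's implementation: the prompt wording, the function names `step_back_answer` and `make_stub_llm`, and the idea of passing the model in as a plain text-in/text-out callable are all assumptions made for illustration.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical prompt templates; the wording is illustrative, not quoted from the paper.
ABSTRACTION_TEMPLATE = (
    "Here is a question:\n{question}\n\n"
    "Step back and ask a more general question about the underlying "
    "concept or principle needed to answer it. Reply with that question only."
)
REASONING_TEMPLATE = (
    "Background:\n{principle}\n\n"
    "Using the background above, answer the original question:\n{question}"
)


def step_back_answer(question: str, llm: Callable[[str], str]) -> Dict[str, str]:
    """Run abstraction, then reasoning, with any text-in/text-out model."""
    # Step 1 (abstraction): turn the specific question into a higher-level one.
    step_back_question = llm(ABSTRACTION_TEMPLATE.format(question=question)).strip()
    # Answer the higher-level question to obtain the grounding facts or principle.
    principle = llm(step_back_question).strip()
    # Step 2 (reasoning): answer the original question grounded on that principle.
    answer = llm(
        REASONING_TEMPLATE.format(principle=principle, question=question)
    ).strip()
    return {
        "step_back_question": step_back_question,
        "principle": principle,
        "answer": answer,
    }


def make_stub_llm(replies: List[str]) -> Tuple[Callable[[str], str], List[str]]:
    """Build a fake model that replays canned replies and logs the prompts it saw."""
    calls: List[str] = []
    queue = list(replies)

    def llm(prompt: str) -> str:
        calls.append(prompt)
        return queue.pop(0)

    return llm, calls
```

To try it without a real model, build a stub with three canned replies (a step-back question, its answer, and a final answer) and pass it to `step_back_answer`; swapping in a real client only requires a function that takes a prompt string and returns the model's text.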

Empirical Validation and Performance Gains with Step-Back Prompting

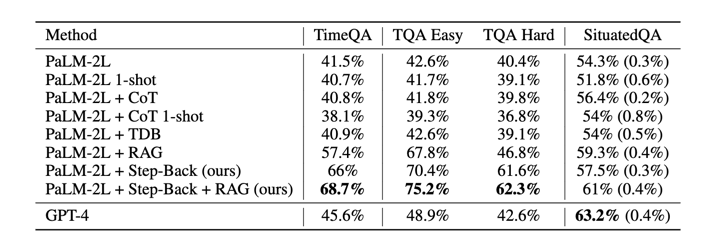

The paper provides a robust empirical validation of the Step-Back Prompting technique. The TimeQA test set, known for its challenging nature, serves as the benchmark for this evaluation.

The results are telling. The baseline models GPT-4 and PaLM-2L reach accuracies of only 45.6% and 41.5% respectively, which shows how hard the TimeQA task is. Introducing Step-Back Prompting, especially when combined with regular retrieval augmentation (RAG), marks a significant leap in performance, achieving an accuracy of 68.7%. This is not just an improvement over the baseline models, but also a substantial enhancement compared to other prompting techniques such as Chain-of-Thought and Take a Deep Breath.
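This post does not spell out how the step-back question and retrieval are wired together, so the following Python sketch shows only one plausible arrangement: retrieve passages for both the original question and the step-back question, merge them, and place them in front of the original question. The toy keyword retriever, the names `keyword_retriever` and `step_back_rag_prompt`, and the prompt layout are all assumptions for illustration, not the paper's setup.

```python
from typing import Callable, List

Retriever = Callable[[str, int], List[str]]


def keyword_retriever(corpus: List[str]) -> Retriever:
    """Toy retriever (an assumption): rank passages by word overlap with the query."""

    def retrieve(query: str, top_k: int) -> List[str]:
        terms = set(query.lower().split())
        ranked = sorted(
            corpus,
            key=lambda doc: len(terms & set(doc.lower().split())),
            reverse=True,
        )
        return ranked[:top_k]

    return retrieve


def step_back_rag_prompt(
    question: str,
    step_back_question: str,
    retrieve: Retriever,
    top_k: int = 2,
) -> str:
    """Build a final prompt from passages retrieved for both questions."""
    passages: List[str] = []
    # Query with the original question and with the more general step-back question.
    for query in (question, step_back_question):
        for passage in retrieve(query, top_k):
            if passage not in passages:  # drop duplicates, keep first-seen order
                passages.append(passage)
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
```

The intuition behind retrieving with the step-back question is that a broader query (for example, a person's whole education history) is more likely to surface the passage that contains the specific fact than a narrowly scoped, time-constrained query.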

The technique’s proficiency becomes even more evident when the TimeQA results are broken down by difficulty level. While all models struggled more with “Hard” questions, Step-Back Prompting in conjunction with RAG handled these complex scenarios notably better, significantly improving performance.

Conclusion and Future Directions

The paper concludes by positioning Step-Back Prompting as a generic and effective method to invoke deeper reasoning capabilities in large language models through the process of abstraction. The extensive experimentation across diverse benchmark tasks bears testament to the technique’s ability to significantly enhance model performance.

The underlying hypothesis is that abstraction enables models to minimize hallucinations and reason more accurately, tapping into their intrinsic capabilities that often remain hidden when responding to detailed questions directly. This paper is not just a presentation of a novel technique; it’s a call to action, urging the community to embrace human-inspired approaches and unlock the true potential of large language models.

The journey of enhancing the reasoning abilities of large language models is far from over. However, the introduction of Step-Back Prompting marks a significant stride forward, providing a solid foundation and a clear direction for future advancements in the field of Natural Language Processing.